At the moment, I'm fortunate to live in a part of the world where visible Northern Lights are fairly common. The problem is that they may only show up for a few minutes during the night, so you either have to be a real night owl or get lucky. I wanted to build something to help me catch the Northern Lights more often.

The idea mostly came after seeing the recent release of the Raspberry Pi Camera Module 3 and wanting an excuse to do something with it.

In this blog post I'll go over the proof of concept of the idea and how I hope to develop the project into something a little nicer.

I already had a bit of a head start on some of the infrastructure from a previous project of mine, a Raspberry Pi baby monitor. It's a two-part setup: a camera-equipped Pi Zero W and a separate server component running on my home server. The Pi Zero is a 'dumb' piece that only takes a picture every few seconds and sends it over HTTP, while all the business logic of collecting, storing, and displaying pictures sits on my server. This makes it easy to unplug the camera and move it around without disrupting the rest of the system.
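
As a rough illustration of how little the 'dumb' camera side needs to do, here is a minimal sketch of a capture-and-send loop using only the Python standard library. It is not the actual code from this project: the `SERVER_URL` endpoint, the function names, and the choice to POST raw JPEG bytes are all assumptions, and `capture` stands in for whatever camera library produces a JPEG frame.

```python
import time
import urllib.request
from typing import Callable, Optional

# Hypothetical upload endpoint on the home server (an assumption).
SERVER_URL = "http://homeserver.local:8000/upload"


def build_upload_request(jpeg_bytes: bytes, url: str = SERVER_URL) -> urllib.request.Request:
    """Wrap one JPEG frame in an HTTP POST request."""
    return urllib.request.Request(
        url,
        data=jpeg_bytes,
        headers={"Content-Type": "image/jpeg"},
        method="POST",
    )


def send_frame(jpeg_bytes: bytes) -> None:
    """POST one frame to the server and discard the response body."""
    with urllib.request.urlopen(build_upload_request(jpeg_bytes), timeout=10) as resp:
        resp.read()


def capture_loop(
    capture: Callable[[], bytes],
    send: Callable[[bytes], None] = send_frame,
    interval_s: float = 5.0,
    max_frames: Optional[int] = None,
) -> int:
    """Capture a frame, send it, sleep, repeat. Returns how many frames were sent.

    Network errors are swallowed so that a server restart or a Wi-Fi
    dropout does not stop the camera; that frame is simply lost.
    """
    attempted = 0
    sent = 0
    while max_frames is None or attempted < max_frames:
        frame = capture()
        attempted += 1
        try:
            send(frame)
            sent += 1
        except OSError:  # urllib.error.URLError is a subclass of OSError
            pass
        time.sleep(interval_s)
    return sent
```

Passing `capture` and `send` in as plain callables keeps the loop independent of any particular camera library, which also makes it easy to exercise on a laptop with fake frames.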

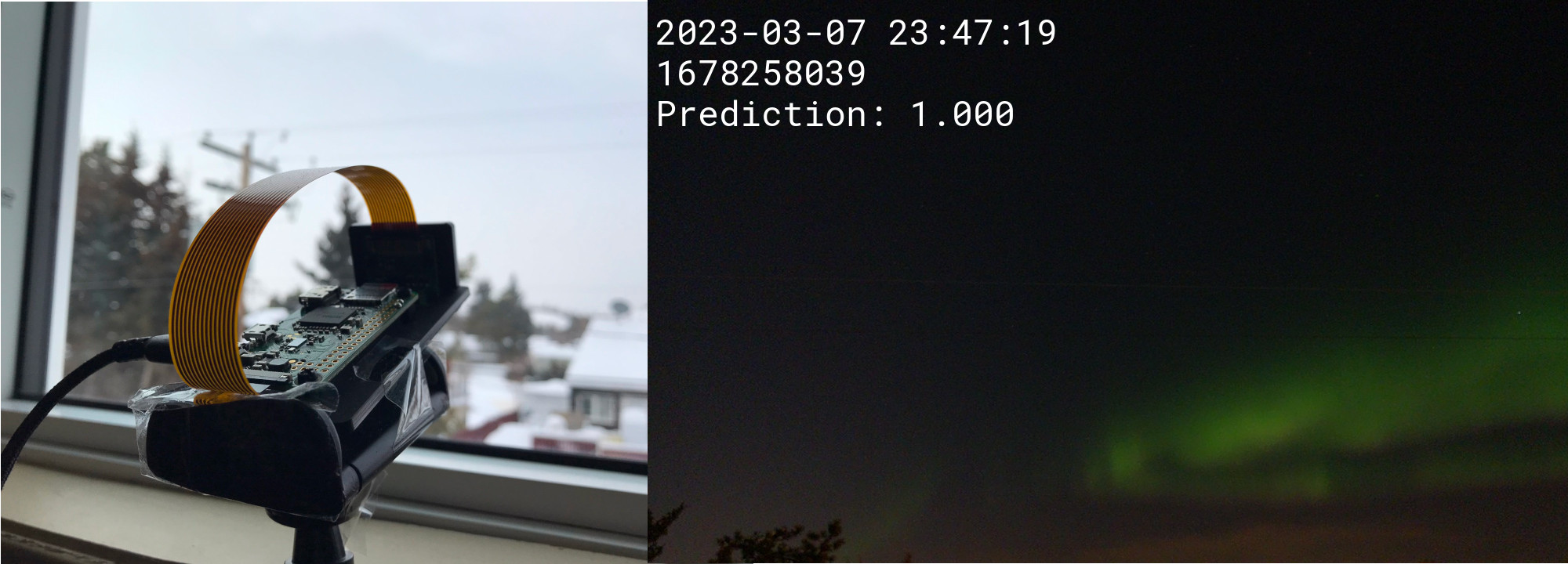

For this particular project, my Pi Zero got a Camera Module 3 upgrade and was pointed straight at the sky out of my north-facing upstairs window. It captures a picture every 5 seconds and sends it to my home server, where it's stored for later; on most nights that comes to about 7,000 images. Each image is stored in a folder, and metadata about the image is written to a small sqlite3 database. To label the images, I used my computer's image previewer to quickly scrub through a night's worth of images and mark them in the database as either containing Northern Lights or not. It only took about 30 minutes per night, but it was pretty boring work nonetheless.
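
To make the storage and labelling step a bit more concrete, here is a minimal sketch of what the metadata table could look like with Python's built-in `sqlite3` module. The table name, column names, and the idea of labelling a whole time range in one go are assumptions for illustration; the real schema may well differ.

```python
import sqlite3

# Hypothetical schema: one row per captured image.
SCHEMA = """
CREATE TABLE IF NOT EXISTS images (
    id          INTEGER PRIMARY KEY,
    path        TEXT NOT NULL UNIQUE,
    captured_at TEXT NOT NULL,  -- ISO 8601 timestamp
    has_aurora  INTEGER         -- NULL = unlabelled, 0 = sky, 1 = aurora
)
"""


def open_db(db_path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the database and make sure the table exists."""
    conn = sqlite3.connect(db_path)
    conn.execute(SCHEMA)
    return conn


def record_image(conn: sqlite3.Connection, path: str, captured_at: str) -> None:
    """Store metadata for a newly received image, initially unlabelled."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO images (path, captured_at) VALUES (?, ?)",
            (path, captured_at),
        )


def label_range(conn: sqlite3.Connection, start: str, end: str, has_aurora: bool) -> int:
    """Label every image captured between start and end (inclusive).

    Returns the number of rows updated. Timestamps must share one
    ISO 8601 format so that string comparison matches time order.
    """
    with conn:
        cur = conn.execute(
            "UPDATE images SET has_aurora = ? WHERE captured_at BETWEEN ? AND ?",
            (int(has_aurora), start, end),
        )
    return cur.rowcount
```

Labelling by time range suits this kind of data because Northern Lights tend to appear in continuous stretches, so noting when a stretch starts and ends while scrubbing through the night is enough to mark hundreds of frames at once.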

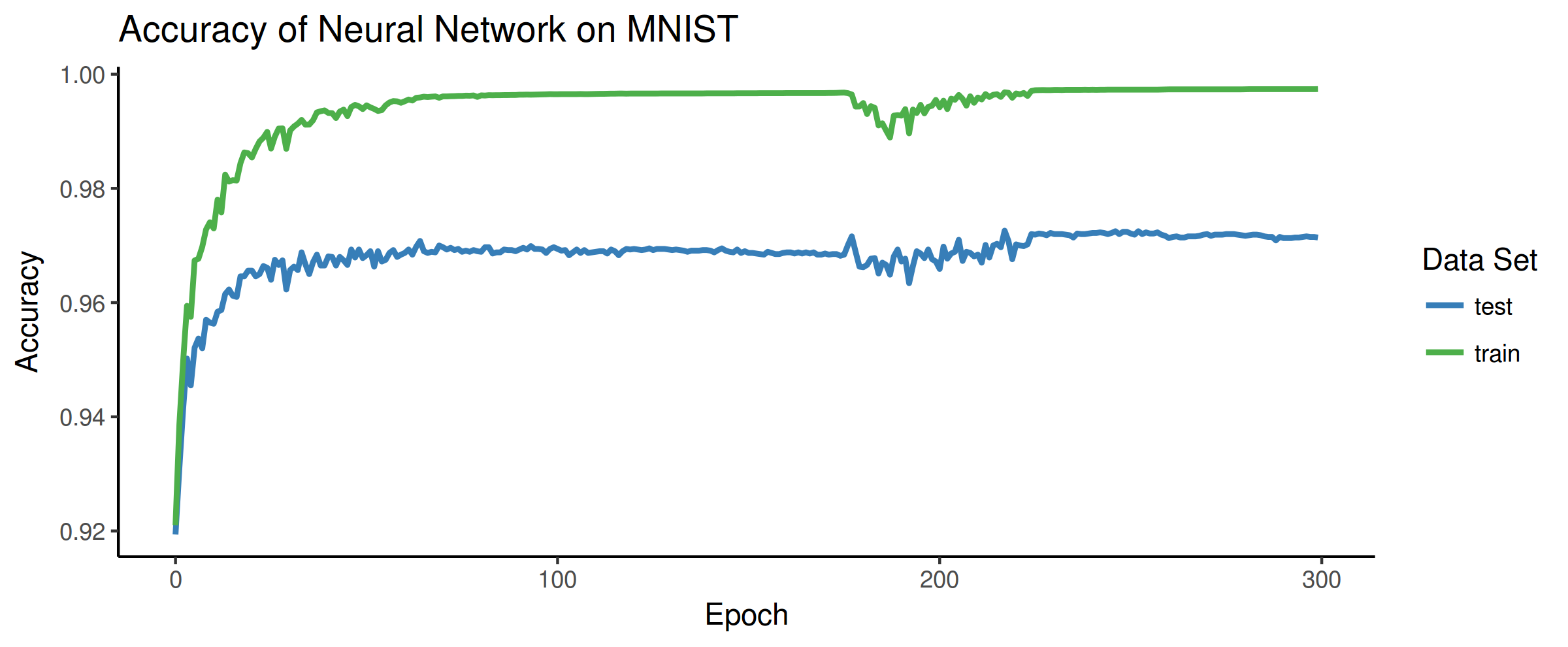

With labelled images, I could sort them into a proper training dataset of 'aurora' and 'sky' pictures. I then trained a large convolutional neural network built using the Keras Python package to classify the pictures. The Keras documentation has a nice article called 'Image Classification from Scratch' which was a good starting point for this task. It shows how to build a model to classify pictures as either dogs or cats, so it was fairly straightforward to adapt it to the task of classifying 'Aurora' or 'No Aurora'.
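
The sorting step can be sketched in a few lines. The snippet below is an illustration rather than the project's actual code: `build_dataset` and its arguments are hypothetical names, and it assumes the labels arrive as `(image_path, has_aurora)` pairs, for example from a database query. It copies each image into one folder per class, which is the layout that Keras's `image_dataset_from_directory` utility can infer labels from.

```python
import shutil
from pathlib import Path
from typing import Dict, Iterable, Tuple, Union

PathLike = Union[str, Path]


def build_dataset(
    labelled: Iterable[Tuple[PathLike, bool]],
    dataset_dir: PathLike,
) -> Dict[str, int]:
    """Copy labelled images into dataset_dir/aurora and dataset_dir/sky.

    Returns how many images ended up in each class.
    """
    counts = {"aurora": 0, "sky": 0}
    for image_path, has_aurora in labelled:
        class_name = "aurora" if has_aurora else "sky"
        target_dir = Path(dataset_dir) / class_name
        target_dir.mkdir(parents=True, exist_ok=True)
        # Copy rather than move, so the original archive stays intact.
        shutil.copy2(image_path, target_dir / Path(image_path).name)
        counts[class_name] += 1
    return counts
```

The returned counts are worth a glance before training: most nights have far more 'sky' frames than 'aurora' frames, and a heavily imbalanced dataset can make accuracy numbers look better than they are.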

Below is a YouTube video showing a full night of particularly active Northern Lights. The third number in the top-left of each image is the prediction from the trained neural network: the closer the number is to 1, the more confident the model is that the picture contains Northern Lights. None of the pictures in this timelapse were used in training the model. Skip ahead to 0:50 to see the Northern Lights in action!

With the concept proven, I'd like to build the whole project out a little bit more. Step 2 is to build a proper webapp that can receive pictures from the Pi, classify them, and display them. The best pictures (according to the model) will get saved, and on really good nights it should send me a text message or some other notification. It will probably be a bit tricky to come up with a good heuristic for this, as sometimes the Northern Lights will be gone within a few minutes.
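
One possible starting point for the notification heuristic is a sliding window over the model's predictions: fire when enough of the most recent frames score above a threshold, then stay quiet for a while. This is only a sketch of one candidate approach, and every number in it (threshold, window size, hit count, cooldown) is a guess that would need tuning against real nights. The class and parameter names are hypothetical.

```python
from collections import deque


class AuroraAlert:
    """Decide when to notify, based on a stream of per-frame predictions."""

    def __init__(
        self,
        threshold: float = 0.8,  # score at or above this counts as a hit
        window: int = 24,        # frames to look back over (2 min at 5 s/frame)
        min_hits: int = 12,      # hits required within the window
        cooldown: int = 720,     # frames to stay quiet after alerting (1 h)
    ) -> None:
        self.threshold = threshold
        self.min_hits = min_hits
        self.cooldown = cooldown
        self.recent = deque(maxlen=window)  # True/False per recent frame
        self.frames_until_ready = 0

    def update(self, score: float) -> bool:
        """Feed one prediction; return True if a notification should fire."""
        self.recent.append(score >= self.threshold)
        if self.frames_until_ready > 0:
            # Still cooling down from the previous alert.
            self.frames_until_ready -= 1
            return False
        if sum(self.recent) >= self.min_hits:
            self.frames_until_ready = self.cooldown
            return True
        return False
```

Requiring several hits inside a short window filters out single-frame false positives, such as a car's headlights sweeping across the window, while still reacting within a minute or so of a real display starting. The cooldown keeps one long display from producing a flood of messages.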

Step 3 is to build a better enclosure for the Raspberry Pi so that I can move it outside to a more permanent location. I'm more of a software person, so I haven't thought too much about this. If anybody has any good suggestions, please let me know.

That's it for now. Thanks for reading. I'll try to put the code and training pictures on GitHub soon.

Update

I've been playing a bit with Google Colab and thought it would be a good way to share the training code and data for this project.

Python Notebook: https://colab.research.google.com/drive/1CFNfKZH_WyrGN71t4Jx4NU-FzBTAeZP5?usp=sharing

Training Images (1.4GB): https://drive.google.com/file/d/1N8uuIMo6AzM6SGoTBQh7iWK_SGIOyh6n/view?usp=sharing